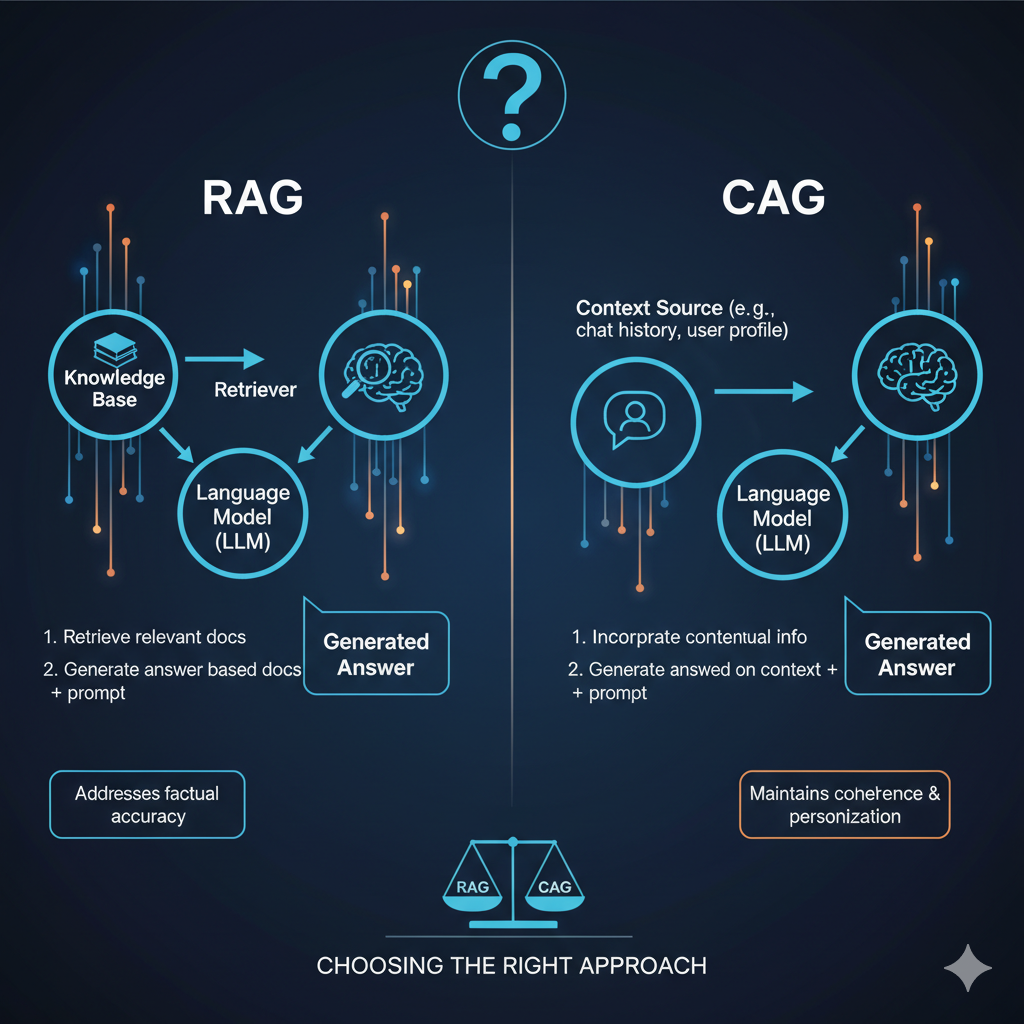

Generative AI has entered its next phase, one where context isn’t just retrieved but understood, structured, and evolved. The debate today is no longer just about Retrieval-Augmented Generation (RAG) but about its emerging successor: Context-Augmented Generation (CAG).

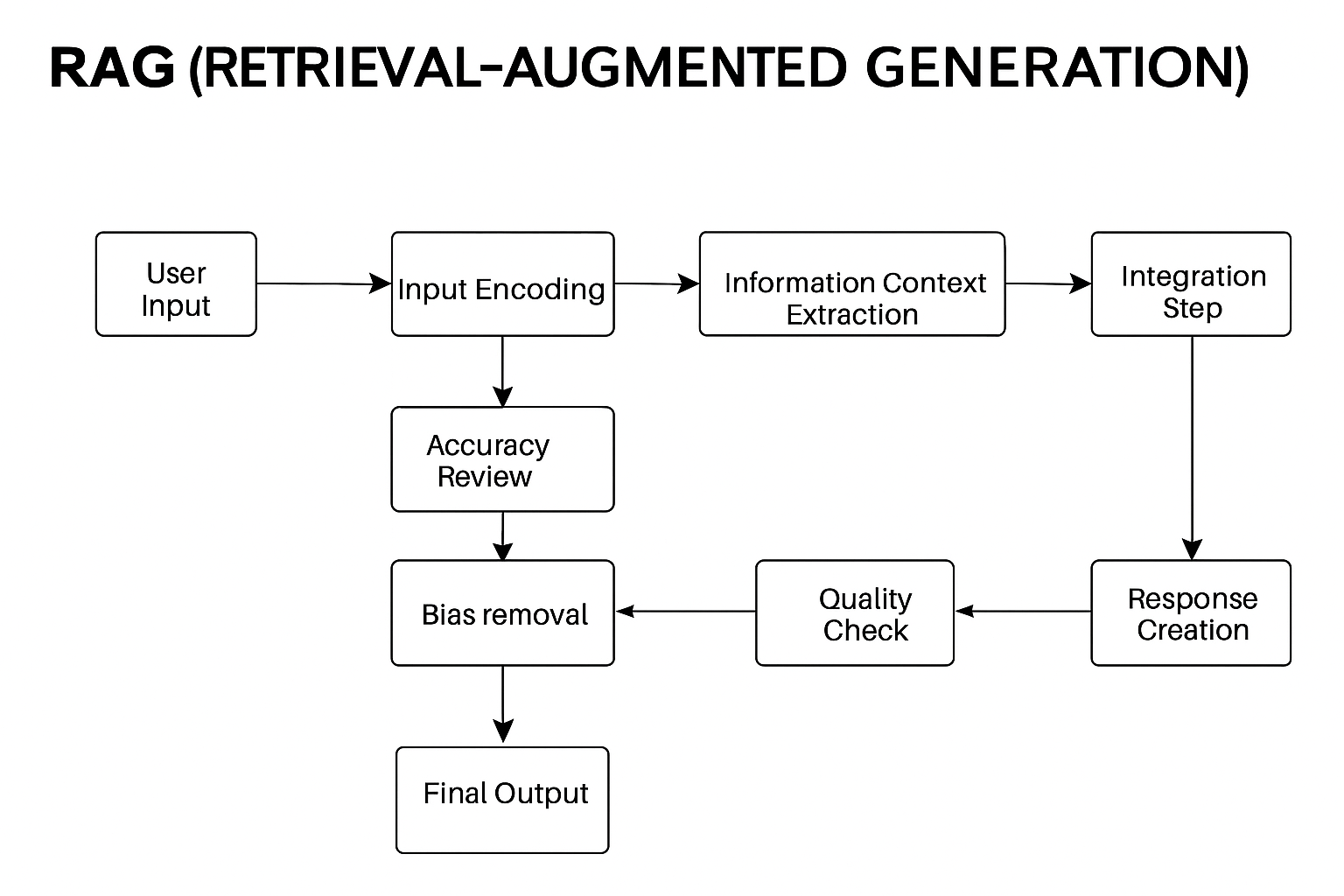

Retrieval-Augmented Generation (RAG) enhances LLMs by connecting them with an external knowledge base. When a user asks a question, RAG retrieves relevant documents and passes them as context to the model before generating a response.

It mitigates one major limitation of traditional LLMs, hallucination, by grounding answers in retrieved, up-to-date data.

- You ask: “What’s Microsoft’s latest AI initiative?”

- The system searches the enterprise knowledge base and retrieves recent announcements; the LLM then generates a factually grounded answer.
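The retrieve-then-generate flow in this example can be sketched in a few lines. This is a minimal illustration rather than a production pipeline: the in-memory `DOCS` list, the word-overlap scoring in `retrieve`, and the `build_prompt` template are all assumptions made for the example. A real system would typically use embedding search over a vector store and send the resulting prompt to an LLM.

```python
import re

# Hypothetical in-memory knowledge base (placeholder text, not real data).
DOCS = [
    "Acme announced a new AI assistant for its support team.",
    "The cafeteria menu changes every Monday.",
    "The AI assistant drafts replies from past support tickets.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query and return the top k."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages ahead of the question as numbered context."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered context below and cite passage numbers.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

query = "What is Acme's latest AI assistant?"
prompt = build_prompt(query, retrieve(query, DOCS))
print(prompt)  # this string is what would be sent to the LLM
```

Numbering the passages is what makes the traceability mentioned below possible: the model can be asked to cite `[1]` or `[2]`, and each citation maps back to a source document.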

Strengths of RAG:

- Dynamic, updatable knowledge (no need to retrain models)

- Transparent grounding and traceability

- Domain-specific adaptability

Limitations of RAG:

- Retrieval quality defines answer quality

- Context window limits and redundancy

- Limited reasoning across multiple sources: it retrieves passages but doesn’t synthesize them deeply
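The redundancy problem is easy to see in code. The sketch below drops near-duplicate passages before they reach the context window, using Jaccard similarity over word sets. The `PASSAGES` sample, the function names, and the `0.7` threshold are assumptions chosen for illustration, not values from any particular RAG framework.

```python
import re

def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: str, b: str) -> float:
    """Share of words the two texts have in common (0.0 to 1.0)."""
    wa, wb = words(a), words(b)
    union = wa | wb
    if not union:
        return 0.0  # two empty texts: treat as not similar, avoid dividing by zero
    return len(wa & wb) / len(union)

def dedupe(passages: list[str], threshold: float = 0.7) -> list[str]:
    """Keep a passage only if it is not too similar to one already kept."""
    kept: list[str] = []
    for p in passages:
        if all(jaccard(p, k) < threshold for k in kept):
            kept.append(p)
    return kept

# Hypothetical retrieved passages; the first two say nearly the same thing.
PASSAGES = [
    "The refund policy allows returns within 30 days.",
    "The refund policy allows returns within 30 days of purchase.",
    "Shipping takes five business days.",
]

unique = dedupe(PASSAGES)
print(unique)  # the second passage is dropped as a near-duplicate
```

Filtering like this frees context-window space for passages that add new information, but it does nothing for the first limitation: if retrieval misses the right document, no amount of deduplication recovers it.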

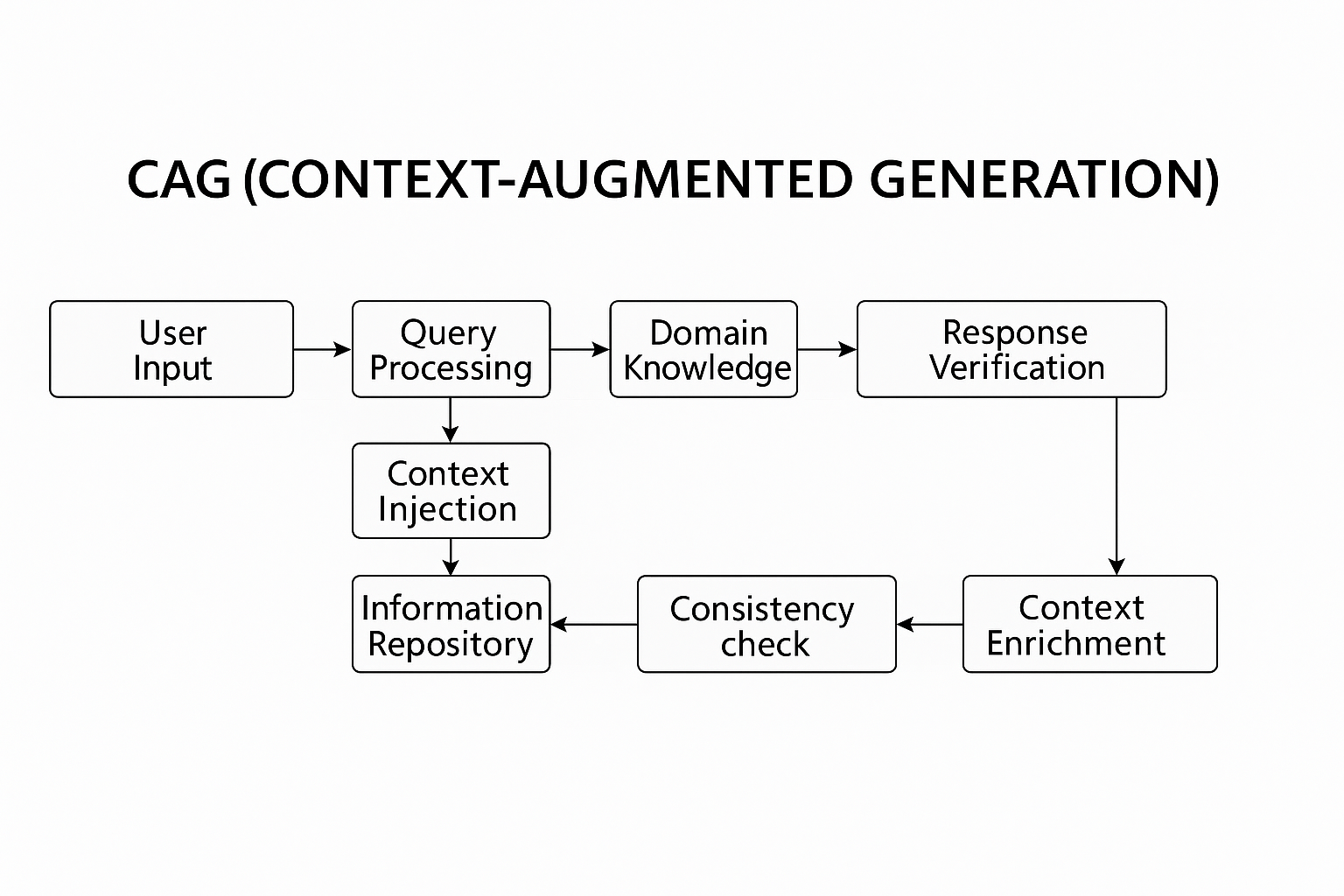

Context-Augmented Generation

Context-Augmented Generation (CAG) is the natural evolution of RAG. Instead of merely retrieving, CAG understands, structures, and prioritizes contextual information dynamically across different layers — memory, history, user intent, and environment.

Think of CAG as RAG + reasoning memory + context hierarchy.

How CAG Works:

- Retrieves information (like RAG)

- Understands the intent and conversation state

- Builds a hierarchical context — combining long-term memory, session history, and dynamic facts

- Generates adaptive, personalized, and goal-oriented responses
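The third step, building a hierarchical context, is the least familiar one, so here is a minimal sketch of it. Everything in it is an assumption made for illustration: the three layer names, the `LAYER_PRIORITY` ordering, the word-count budget, and the sample items. Intent detection and the final generation call are left out.

```python
from dataclasses import dataclass

@dataclass
class ContextItem:
    layer: str  # one of "memory", "history", "facts"
    text: str

# Hypothetical priority order: lower number = packed first when space is tight.
LAYER_PRIORITY = {"memory": 0, "history": 1, "facts": 2}

def build_context(items: list[ContextItem], max_words: int) -> str:
    """Pack items into labeled layers, highest-priority layers first."""
    ordered = sorted(items, key=lambda it: LAYER_PRIORITY[it.layer])
    sections: dict[str, list[str]] = {}
    used = 0
    for item in ordered:
        n = len(item.text.split())
        if used + n > max_words:
            continue  # skip items that do not fit the remaining budget
        sections.setdefault(item.layer, []).append(item.text)
        used += n
    # Dicts keep insertion order, so layers come out in priority order.
    return "\n".join(
        f"[{layer}]\n" + "\n".join(f"- {t}" for t in texts)
        for layer, texts in sections.items()
    )

items = [
    ContextItem("facts", "Invoice 1042 was approved yesterday."),
    ContextItem("history", "User asked about pending invoices earlier in this session."),
    ContextItem("memory", "User prefers short bullet-point answers."),
]

context = build_context(items, max_words=15)
print(context)  # with this budget, the facts layer does not fit
```

Which layer wins under a tight budget is a design choice. Here long-term memory outranks freshly retrieved facts; a system that values freshness over personalization would simply reorder `LAYER_PRIORITY`.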

Key Differences: RAG vs CAG

| Aspect | RAG | CAG |
|---|---|---|
| Core Mechanism | Retrieves and injects documents | Builds multi-layered contextual understanding |
| Data Scope | Static or document-based knowledge | Dynamic, multi-source, conversational memory |
| Output Quality | Factually accurate | Factually accurate + contextually relevant |
| Adaptability | Limited to retrieved data | Learns and adapts over sessions |
| Use Case | Search-augmented Q&A, knowledge bots | Personalized AI assistants, enterprise copilots |

Why CAG Matters for Enterprises

As organizations integrate GenAI across CRM, HR, finance, and operations, static retrieval isn’t enough. Business context evolves in real time. CAG allows AI systems to:

- Remember user preferences and organizational policies

- Adapt to ongoing workflows

- Maintain continuity across conversations

- Provide insights, not just answers

Imagine an enterprise copilot that doesn’t just “fetch” data from Salesforce or SAP — it understands your current task, recalls prior actions, and recommends the next step. That’s the promise of CAG.

RAG made AI grounded.

CAG makes AI contextually intelligent.